New DeepSeek V3-0324 Model Challenges GPT-4o and Claude-3.5

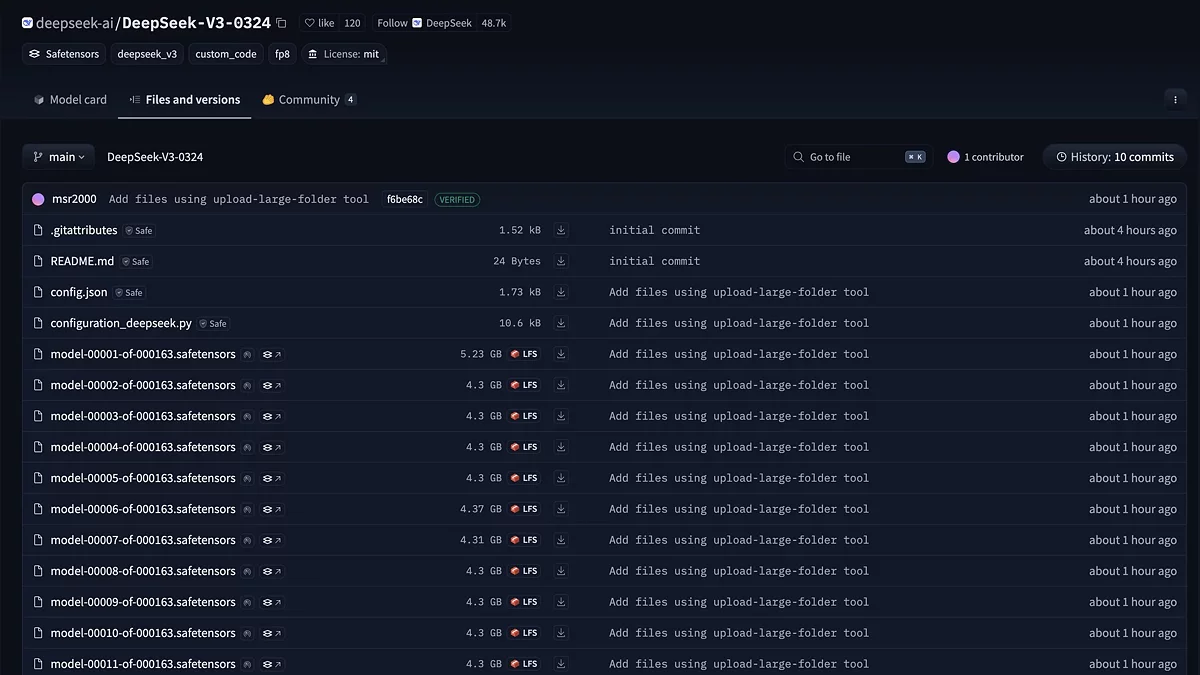

DeepSeek AI has announced a major upgrade to its flagship model, DeepSeek V3-0324. The model, available on GitHub and Hugging Face, matches closed alternatives such as GPT-4o and Claude-3.5-Sonnet and surpasses them in several key areas.

At the core of the update is an enhanced Mixture-of-Experts (MoE) architecture: the model has 671 billion parameters in total, but only 37 billion of them are activated for each token. Multi-head Latent Attention (MLA), which compresses the attention key-value cache, reduces memory consumption by 60%, while Multi-Token Prediction (MTP) speeds up text generation by a factor of 1.8.

The model was trained on a dataset that includes math problems, code in 15 programming languages, and scientific papers. Training took 2.788 million GPU hours on H800 clusters, the equivalent of about 318 years of continuous work on a single accelerator (2.788 million hours ÷ 8,760 hours per year ≈ 318). The result: 89.3% accuracy on grade-school math word problems (GSM8K) and a 65.2% pass rate on code generation (HumanEval), 10–15% higher than previous open-source solutions.
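In a Mixture-of-Experts layer, a small router scores every expert for each token and only the top-scoring few are actually run. This is how a 671-billion-parameter model can use only about 37 billion parameters (roughly 5.5%) per token. The sketch below shows generic top-k softmax gating in plain Python as an illustration of the technique, not DeepSeek's code. The production router differs in several details (the DeepSeek-V3 technical report describes shared experts alongside routed ones, plus its own scoring and load-balancing scheme), and the toy experts, router weights and function names here are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    router_weights: Sequence[Vector],
    experts: Sequence[Expert],
    k: int,
) -> Tuple[Vector, List[int], List[float]]:
    """Route one token to its top-k experts and mix their outputs.

    Returns (output, selected expert indices, gate weights).
    """
    # 1. Router: one affinity score per expert (dot product with that expert's row).
    scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # 2. Keep only the k highest-scoring experts; all others are skipped entirely.
    selected = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # 3. Normalize the gate weights over the selected experts only.
    gates = softmax([scores[i] for i in selected])
    # 4. Run just the selected experts and take the gate-weighted sum of their outputs.
    output = [0.0] * len(token)
    for gate, i in zip(gates, selected):
        expert_out = experts[i](token)
        output = [o + gate * v for o, v in zip(output, expert_out)]
    return output, selected, gates


if __name__ == "__main__":
    # Toy setup: 4 hypothetical experts that simply scale the input by 1, 2, 3 or 4.
    experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
    router = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
    out, chosen, gates = moe_forward([0.5, 2.0], router, experts, k=2)
    print(chosen)  # [2, 1]
    print([round(g, 3) for g in gates])
    print([round(x, 3) for x in out])
```

With `k=2`, experts 0 and 3 are never evaluated for this token; at DeepSeek's scale the same principle means most of the model's weights sit idle on any given token, which keeps per-token compute far below what the total parameter count suggests.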

The update has brought some unexpected improvements:

- Frontend code generation now produces visually appealing interfaces;

- Text quality has reached human-level fluency in long-form essays;

- Function calling accuracy has hit 92%, solving one of the key issues with previous versions.
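Function calling means the model answers with a structured request to invoke a tool that the developer has defined, rather than with plain text. Below is a minimal sketch of such a request body, assuming that DeepSeek's API accepts the OpenAI-style chat-completions `tools` format and that V3-0324 is served under the model name `deepseek-chat` (both are assumptions to check against DeepSeek's API documentation). The `get_weather` tool is hypothetical, and the code only builds and prints the JSON; it does not send anything.

```python
import json
from typing import Any, Dict


def build_tool_call_request(question: str) -> Dict[str, Any]:
    """Build a chat-completions request body that offers the model one tool."""
    # Hypothetical tool, described with a JSON Schema for its arguments.
    weather_tool = {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string", "description": "City name"},
                },
                "required": ["city"],
            },
        },
    }
    return {
        "model": "deepseek-chat",  # assumption: API model name serving V3-0324
        "messages": [{"role": "user", "content": question}],
        "tools": [weather_tool],
    }


if __name__ == "__main__":
    body = build_tool_call_request("What's the weather in Hangzhou?")
    print(json.dumps(body, indent=2))
```

In the OpenAI-compatible format, a model that decides to use the tool returns a `tool_calls` entry with the function name and JSON-encoded arguments; your code runs the function and sends the result back in a follow-up message. "Accuracy" here means the model picks the right tool and fills in valid arguments.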

While the official update notes have not yet been released, the model's size is reportedly 700 GB. It is available via API with a distinctive "temperature calibration" system: the default API temperature of 1.0 is automatically mapped to an optimal model temperature of 0.3. For local deployment, developers are offered modified prompt templates that support web search and file analysis, a feature previously available only in premium commercial solutions.
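The calibration can be expressed as a simple piecewise function from the temperature a client sends to the temperature the model actually samples with. The sketch below assumes the rule given on the DeepSeek-V3-0324 Hugging Face model card (T_model = 0.3 × T_api for 0 ≤ T_api ≤ 1, and T_model = T_api − 0.7 for 1 < T_api ≤ 2); treat this as an assumption and check the card, since the server-side behavior may change. The function name `api_to_model_temperature` is hypothetical.

```python
def api_to_model_temperature(t_api: float) -> float:
    """Map an API temperature to the temperature the model actually samples with.

    Assumed piecewise rule (from the model card; verify before relying on it):
      0 <= T_api <= 1  ->  T_model = 0.3 * T_api
      1 <  T_api <= 2  ->  T_model = T_api - 0.7
    """
    if not 0.0 <= t_api <= 2.0:
        raise ValueError(f"API temperature must be in [0, 2], got {t_api}")
    if t_api <= 1.0:
        # The low range is compressed, so the common default of 1.0 becomes 0.3.
        return 0.3 * t_api
    # Above 1.0 the scale shifts down by 0.7, which keeps the mapping continuous at 1.0.
    return t_api - 0.7


if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"T_api={t:.1f} -> T_model={api_to_model_temperature(t):.2f}")
```

The design keeps existing clients working: code that never changes the default of 1.0 automatically gets the lower, more deterministic 0.3, while callers who explicitly ask for higher temperatures can still reach values above 1.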

Experts predict that DeepSeek V3-0324 could disrupt the AI assistant market for programming and data analysis. Its open-source availability under an MIT license opens the door to customization, from business process automation to the creation of specialized scientific assistants.
