Google's AI Learns to Analyze the World Through a Smartphone Camera

At MWC in Barcelona, Google introduced groundbreaking new features for its AI assistant, Gemini. Starting in March, subscribers to the Google One AI Premium plan will be able to transform their smartphones into AI-powered ‘eyes’ thanks to two key innovations—Live Video Analysis and Smart Screenshare.

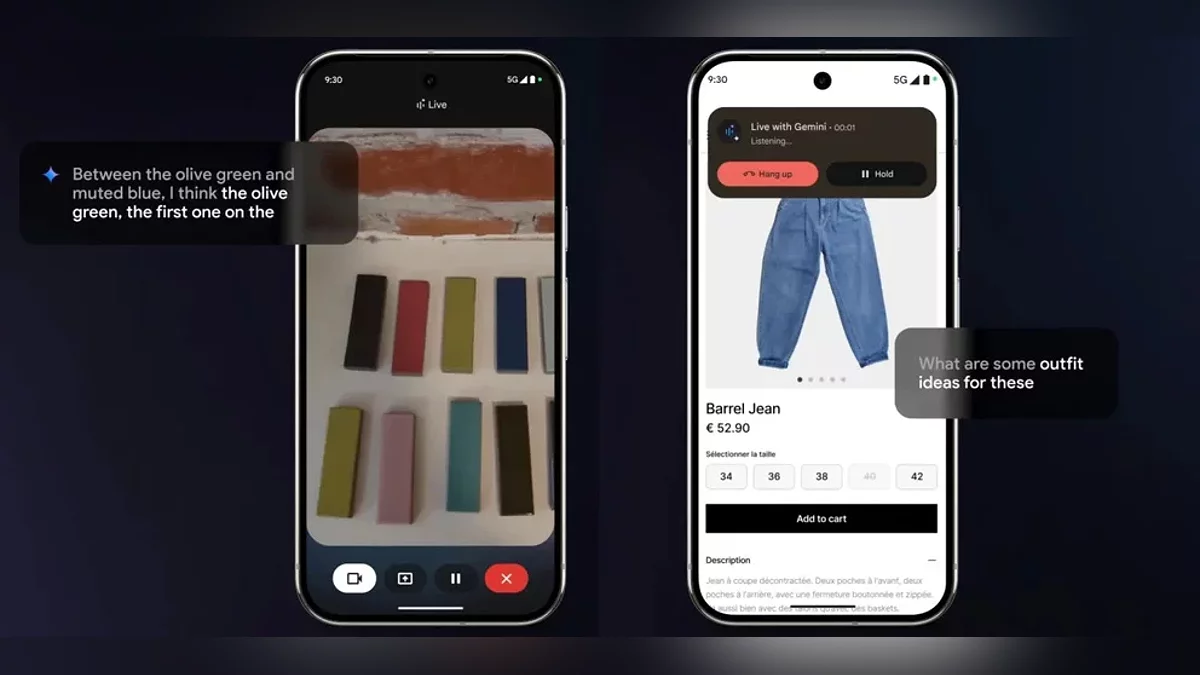

Live Video Analysis lets the assistant process camera input in real time. Users can point their camera at a piece of clothing for styling advice or scan a room to receive interior design suggestions. Gemini doesn’t just "see" what’s on the screen; it actively engages in dialogue. For instance, users can ask it to optimize a navigation route or clarify a complex chart in a presentation, and receive explanations in a conversational format.

For now, these features are available only on Android devices, though they support multiple languages. At the Google booth, the company showcased Gemini running on Samsung, Xiaomi, and other partner devices, emphasizing cross-brand compatibility. There’s no word yet on when iOS users will get access.

The announced updates are just one step toward Google’s ambitious Astra project. By 2025, the company aims to develop a universal multimodal assistant capable of:

- Analyzing video, audio, and text data simultaneously;

- Maintaining conversation context for up to 10 minutes;

- Integrating data from Search, Lens, and Maps for comprehensive solutions.

Although Google has not officially announced Astra as a standalone product, experts speculate that its features will gradually be integrated into Gemini, intensifying competition with ChatGPT. Notably, OpenAI has offered Advanced Voice Mode with screen analysis since December 2024, but Google is betting on deep integration with its own ecosystem.

The ability of AI to process visual information in real time is blurring the line between the digital and physical worlds. Users are no longer just interacting with a "talking assistant" but engaging with an active participant in their daily tasks—from shopping to learning. With the launch of Gemini Vision, AI assistants are entering an era of hyper-contextual interaction, where the key question shifts from "How do I ask?" to "What do I show?"

One major question remains: privacy. How will Google protect data transmitted through the camera and screen? The company says that all analysis is conducted under strict security standards, but full details will be revealed only once the features are officially released.
