NVIDIA Adopts GAA Transistors: Huang Promises a 20% Boost in Performance

At the GTC 2025 conference in San Jose, NVIDIA CEO Jensen Huang outlined the company's technology strategy, which combines semiconductor innovation with the build-out of a robust AI infrastructure. According to Huang, moving to gate-all-around (GAA) transistors in the upcoming Feynman chips, expected in 2028, will raise performance by up to 20%. However, the company is putting its main emphasis on architecture and software rather than simply racing to adopt the newest manufacturing processes.

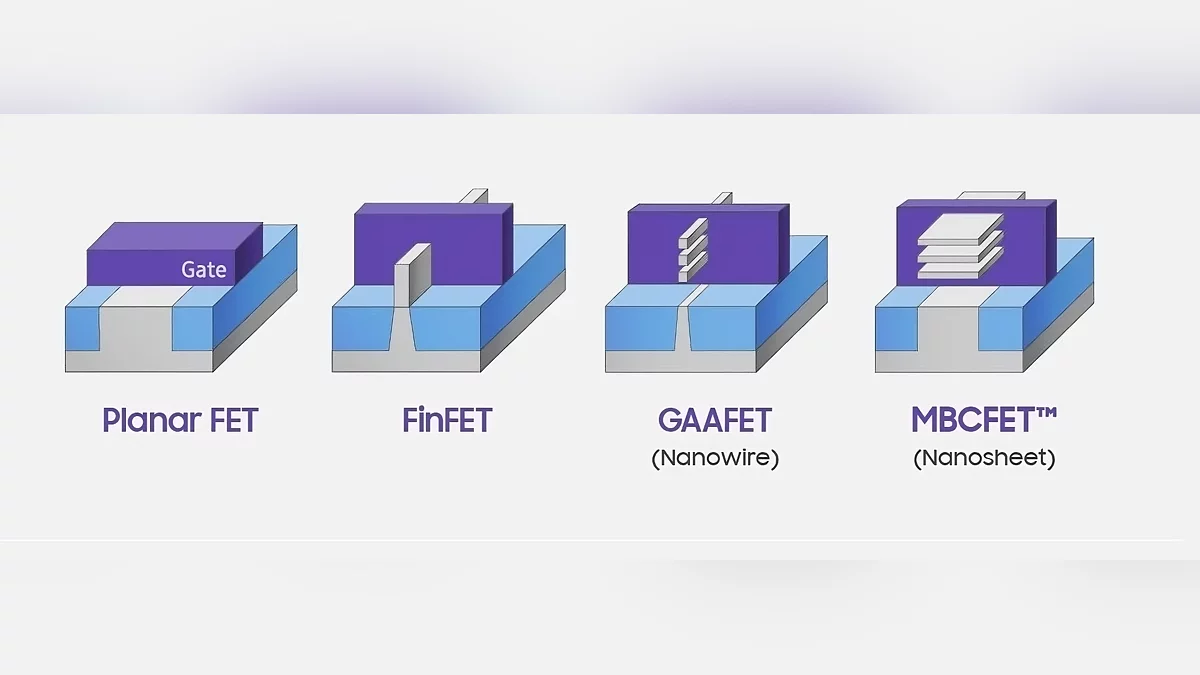

Huang noted that the era of revolutionary breakthroughs in lithography is coming to an end. By TSMC's own estimates, even the move to its 2-nm node with GAA transistors will deliver only a 10–15% performance improvement. Nonetheless, NVIDIA, traditionally cautious about adopting immature process technologies, plans to use refined variants such as N2P or A16, the latter with backside power delivery. Huang believes this approach will reach the promised 20% gain by reducing resistance in the power-delivery network and stabilizing power consumption.

Huang stated plainly that NVIDIA no longer sees itself as a traditional chipmaker. Instead, the company is building a comprehensive AI infrastructure that spans algorithms for computer vision, robotics, and even chip design. One example of this strategy is the upcoming Rubin platform (due in 2026) on TSMC's 3-nm N3P process, which will pair the Vera CPU with the Rubin GPU in a unified architecture for data centers. Yet, as Huang points out, its full potential will only be realized in combination with proprietary software such as CUDA and Omniverse.

The interest in GAA technology is well-founded: these transistors can indeed deliver a performance uplift. In a GAA transistor, the gate completely surrounds the conducting channel, which reduces leakage current and gives the gate tighter control over the channel. Analysts note that Huang's statements reflect a broader industry trend: a shift in focus from simply raising clock speeds to improving power efficiency and scalability. As AI clusters grow, even a 20% chip-level gain could, according to these analysts, potentially double data center performance, provided the system-level integration is handled well.
